What is Apache Hadoop?

Apache Hadoop is an open-source software framework for distributed storage and distributed processing of very large data sets on computer clusters built from commodity hardware.

The core of Apache Hadoop consists of a storage part, known as Hadoop Distributed File System (HDFS), and a processing part called MapReduce. Hadoop splits files into large blocks and distributes them across nodes in a cluster. To process data, Hadoop transfers packaged code for nodes to process in parallel based on the data that needs to be processed(i.e. code goes to the data).

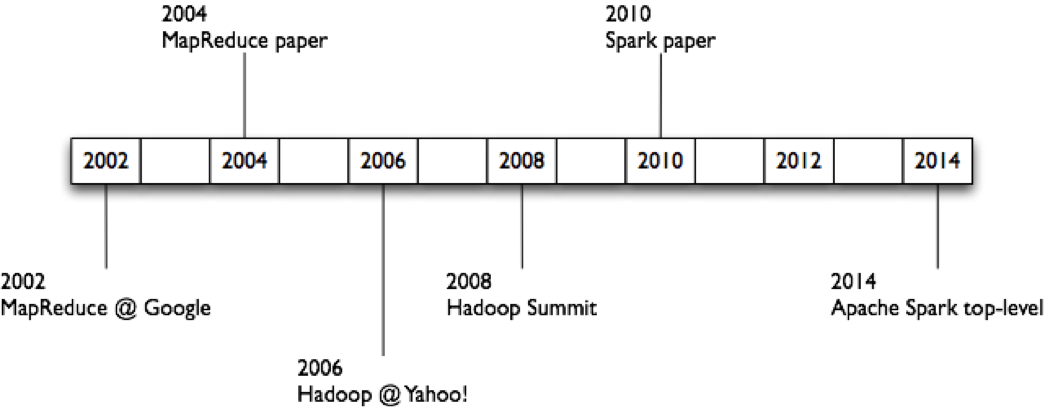

Apache Hadoop's MapReduce and HDFS components were inspired by Google papers on their MapReduce and Google File System.

What does core Apache Hadoop framework consist of?

The base Apache Hadoop framework is composed of the following modules:

- Hadoop Common – contains libraries and utilities needed by other Hadoop modules

- Hadoop Distributed File System (HDFS) – a distributed file-system that stores data on commodity machines, providing very high aggregate bandwidth across the cluster

- Hadoop Yet Another Resource Negotiator(YARN) (hadoop v2.0+) – a resource-management platform responsible for managing computing resources in clusters and using them for scheduling of users' applications

- Hadoop MapReduce – a programming model and an associated implementation for processing and generating large data sets with a parallel, distributed algorithm on a cluster.

However, Hadoop today refers to a whole ecosystem which includes additional software packages(or plugins) to Apache Hadoop like Apache Pig, Apache Hive, Apache HBase, Apache Phoenix, Apache Spark, Apache ZooKeeper, Cloudera Impala, Apache Flume, Apache Sqoop, Apache Oozie, Apache Storm, etc which help is easier ingestion, processing, storage and management of data.

Apache Hadoop Architecture

Hadoop consists of the Hadoop Common package, which provides filesystem and OS level abstractions, a MapReduce engine (either MapReduce/MR1 or YARN/MR2) and the Hadoop Distributed File System (HDFS).

Hadoop packages/jars are to be present on all the nodes in the Hadoop cluster and ssh connections(password-less ssh) is to be establish amongst them. Hadoop requires Java Runtime Environment (JRE) 1.6 or higher.

Generally, a Hadoop Cluster is managed by a Master node which is the Name Node(server to host the file system index) and also also contains the Job Tracker(server which manages job scheduling across nodes). All other nodes(slave/worker) in the cluster form the Data Nodes(the data storage) and also contain the Task Trackers(which execute the MapReduce Jobs on the Data). Additionally there is a Secondary NameNode set up which holds a snapshot of the namenode's memory structures, thereby preventing file-system corruption and loss of data.

HDFS stores large files across multiple machines. It achieves reliability by replicating the data across multiple hosts. The default replication factor(value) is 3, so data is stored on three nodes: two on the same rack, and one on a different rack. Data nodes communicate with each other to re-balance data and to keep the replication of data high.

HDFS was designed for mostly immutable files(i.e. files without updates or changes) and may not be suitable for systems requiring concurrent write-operations. This is because when a seek and update is to be done, first the location information is to be received from the name node then the file system block is to be located, a buffer chunk of blocks(usually much more than what is required) is read in and then the operation is performed. So when a specific position in a block is to be access from the HDFS, many blocks maybe read off the disk then one may expect.

The MapReduce Engine, which consists of one JobTracker, to which client applications submit MapReduce jobs. The JobTracker pushes work out to available TaskTracker nodes in the cluster, striving to keep the work as close to the data as possible. With a rack-aware file system, the JobTracker knows which node contains the data, and which other machines are nearby. If the work cannot be hosted on the actual node where the data resides (or the processing takes too long), priority is given to nodes in the same rack. This reduces network traffic on the main backbone network.

The HDFS file system is not restricted to MapReduce jobs. It maybe used with multiple other package depending on requirement. It best works for batch processing but can be used to complement a real-time system such as Apache Storm, Spark or Flink in a Lambda or Kappa Architecture.

Hadoop Distributions

Apache Hadoop - A standard open source Hadoop distribution consists of the Hadoop Common package, MapReduce Engine and HDFS.

Vendor distributions are designed to overcome issues with the open source edition and provide additional value to customers, with focus on things such as:

- Reliability - Vendors promptly deliver fixes and patches to bugs.

- Support - They provide technical assistance, which makes it possible to adopt the platforms for enterprise-grade tasks.

- Completeness - Very often Hadoop distributions are supplemented with other tools to address specific tasks and vendors provide compatible plugins to supplement plugins which maybe necessary for various tasks.

Most popular Hadoop Distributions(as of 2015):

Commercial Applications of Hadoop

Few of the commercial applications of Hadoop are(source):

- Log and/or clickstream analysis of various kinds

- Marketing analytics

- Machine learning and/or sophisticated data mining

- Image processing

- Processing of XML messages

- Web crawling and/or text processing

- General archiving, including of relational/tabular data.

Hadoop commands reference: